Architectural Fitness Functions: Building Effective Feedback Mechanisms for Quality Attributes

How software development teams can use fitness functions to establish feedback loops and evaluate quality attributes like performance and reliability, using atomic and holistic approaches.

Development teams build software products. Ideally, those teams are cross-functional and deliver (parts of) a system autonomously. Establishing feedback loops between teams and the system they build is crucial. As I wrote in “Course Correction: Implementing Effective Feedback”:

Without feedback, we cannot learn and cannot make decisions based on reality. We can only progress by guessing. Feedback gives us important input for course correction. How else would we know if users even interact with the new feature idea we released? Or how would we know if the new resilience concept we implemented enhances the reliability of our system?

You can gather feedback on multiple aspects. One of the most crucial is whether the system meets its important quality attributes, like performance or reliability: Do we need to build a software service that is both highly scalable and highly available? Is high performance crucial for our product? We need to assess whether the system we build lives up to these requirements.

We need effective and (if possible) automated mechanisms, especially for quality attributes. The book "Building Evolutionary Architectures: Automated Software Governance" introduced a term for feedback mechanisms on quality attributes borrowed from evolutionary algorithms: (architectural) fitness functions.

In short, an (architectural) fitness function evaluates the system with regard to a quality attribute. You can distinguish fitness functions by the scope of their evaluation:

Atomic fitness functions evaluate a part of the system on a specific quality attribute, like measuring the length of methods within classes or monitoring the status of nodes within a system. Those fitness functions are easier to build, but they only give you a narrow view of the system's quality.
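To make the method-length example concrete, here is a minimal Python sketch of such an atomic fitness function. It assumes Python source code and a hypothetical team limit of 30 lines per function; it parses the code with the standard `ast` module and reports every function or method that exceeds the limit.

```python
import ast

# Hypothetical team convention; tune the threshold to your own codebase.
MAX_FUNCTION_LINES = 30


def long_functions(source, max_lines=MAX_FUNCTION_LINES):
    """Return (name, length) for each function or method longer than max_lines."""
    violations = []
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            # end_lineno is populated by ast.parse on Python 3.8+.
            # Length counts from the "def" line; decorators are not included.
            length = node.end_lineno - node.lineno + 1
            if length > max_lines:
                violations.append((node.name, length))
    return violations
```

A pipeline step could run this over every changed file and fail the build whenever the returned list is non-empty.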

Fitness functions can also be holistic. They test the entire system or large parts of it and assess specific quality attributes. For example, they might measure response times or observe the system after being put under load. Holistic fitness functions are usually more complex and expensive to build.

In general, I prefer holistic fitness functions over atomic fitness functions. To avoid building and maintaining many costly, complex fitness functions, focus on your top quality attributes. Build holistic fitness functions only for those.

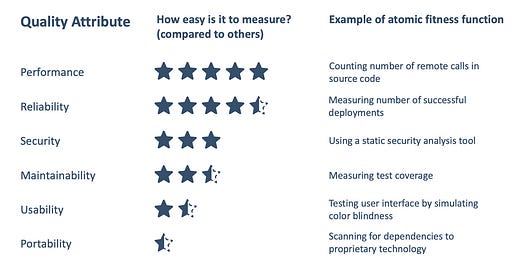

Next, let's discuss some quality attributes and examples of related fitness functions. The following figure summarizes the sections below:

Performance

Performance is an easy quality attribute to measure. Many tools can instrument your system, put it under artificial load, and measure its efficiency. See the section “Further Information: Tools” for more information.

You can implement holistic fitness functions by getting performance data from your production system. Alternatively, you can put a staging system under artificial load and observe its performance.

Example of an atomic fitness function

A team measures how many calls their service makes to other services by instrumenting their code and counting the remote calls. This helps the team keep remote calls to a minimum and question whether new ones are really necessary.
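One way to instrument the code is sketched below in Python. The `remote_call` decorator, the in-memory counter, and the budget check are hypothetical helpers for illustration; a real setup would more likely export the counts to a metrics or tracing system.

```python
from collections import Counter
from functools import wraps

# In-memory tally of remote calls per target service.
remote_call_counter = Counter()


def remote_call(target):
    """Hypothetical decorator that marks a function as a call to another service."""
    def decorator(func):
        @wraps(func)
        def wrapper(*args, **kwargs):
            remote_call_counter[target] += 1
            return func(*args, **kwargs)
        return wrapper
    return decorator


def within_remote_call_budget(max_calls):
    """Fitness check: True if the recorded remote calls stay within the budget."""
    return sum(remote_call_counter.values()) <= max_calls


@remote_call("inventory-service")
def fetch_stock(product_id):
    return 5  # stub standing in for an HTTP request to another service
```

In a test, the team could clear the counter, exercise one request path, and assert that `within_remote_call_budget` still holds for the agreed number of calls.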

Example of a holistic fitness function

A platform team provides a staging environment that resembles the production environment. Using load testing tools, they continuously create an artificial load on the system and measure the response times of critical workflows. They publish the results of these tests on a dashboard, allowing development teams to see potential performance impacts of their deployments.
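The check behind such a dashboard can be small. The following Python sketch assumes the load-testing tool exports raw response times in milliseconds for a critical workflow; it evaluates the 95th percentile (nearest-rank method) against a hypothetical latency budget. A percentile is used instead of an average because averages tend to hide slow outliers.

```python
import math


def percentile(samples, pct):
    """Nearest-rank percentile: smallest sample with at least pct% of samples <= it."""
    if not samples:
        raise ValueError("need at least one sample")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def meets_latency_budget(samples_ms, budget_ms, pct=95):
    """Fitness check: True if the pct-th percentile response time is within budget."""
    return percentile(samples_ms, pct) <= budget_ms
```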

Reliability

Similar to performance, you can measure the system's reliability directly, often in combination with load testing. The difference is that performance can be measured in units of time, while reliability needs a clearer definition. What functionality needs to work for your system to count as reliable? Which workflows need to be available? You need to define reliability metrics to build an effective fitness function. You can draw on well-established metrics like MTBF (mean time between failures) or MTTR (mean time to recovery).
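As an illustration of these two metrics, here is a minimal Python sketch that derives them from incident records. It assumes that incidents do not overlap and fall inside the observation window. It uses the common definitions: MTTR is total downtime per incident, and MTBF is total uptime in the window per incident. The `Incident` record is hypothetical; in practice the timestamps would come from your incident management or monitoring system.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Incident:
    started: datetime    # when the failure began
    recovered: datetime  # when service was restored


def _total_downtime(incidents):
    return sum((i.recovered - i.started for i in incidents), timedelta())


def mttr(incidents):
    """Mean time to recovery: average duration of an incident."""
    if not incidents:
        raise ValueError("MTTR is undefined without incidents")
    return _total_downtime(incidents) / len(incidents)


def mtbf(incidents, window_start, window_end):
    """Mean time between failures: operating (up) time per failure in the window."""
    if not incidents:
        raise ValueError("MTBF is undefined without incidents")
    uptime = (window_end - window_start) - _total_downtime(incidents)
    return uptime / len(incidents)
```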

Considering failure is also essential for reliability. Your system can be reliable only if it withstands faults in your infrastructure, like failing nodes or high network latency. You can use fault injection tools to simulate faults and monitoring tools to observe the system's reaction. This method is sometimes called "Chaos Engineering."
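The idea of injecting faults and observing the reaction can be shown in miniature. The Python sketch below is an in-process simulation, not a replacement for infrastructure-level tools like Chaos Mesh: a hypothetical `inject_faults` wrapper makes a dependency fail with a given probability, a simple retry wrapper plays the role of the resilience mechanism under test, and `success_rate` is the observed metric.

```python
import random


class InjectedFault(ConnectionError):
    """Simulated infrastructure failure raised by the fault injector."""


def inject_faults(func, failure_rate, rng):
    """Wrap func so that each call fails with probability failure_rate."""
    def wrapper(*args, **kwargs):
        if rng.random() < failure_rate:
            raise InjectedFault("injected fault")
        return func(*args, **kwargs)
    return wrapper


def with_retries(func, attempts):
    """Resilience mechanism under test: retry on connection errors."""
    if attempts < 1:
        raise ValueError("attempts must be >= 1")

    def wrapper(*args, **kwargs):
        for attempt in range(attempts):
            try:
                return func(*args, **kwargs)
            except ConnectionError:
                if attempt == attempts - 1:
                    raise  # out of retries: surface the failure
    return wrapper


def success_rate(func, runs):
    """Observed fraction of calls that completed without a connection error."""
    successes = 0
    for _ in range(runs):
        try:
            func()
            successes += 1
        except ConnectionError:
            pass
    return successes / runs
```

Comparing the success rate with and without the retry wrapper under the same fault rate shows whether the resilience mechanism actually pays off.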

Example of an atomic fitness function

A development team experiences a high deployment failure rate of a microservice they are maintaining. They begin to measure the number of failed deployments and aim to bring it under 2% by incrementally improving their deployment infrastructure.
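A minimal Python sketch of this metric follows. It assumes the team can export deployment outcomes from its CI/CD system into simple records. The `Deployment` record is hypothetical, and the 2% target is taken from the example.

```python
from dataclasses import dataclass

# Target from the example: keep the failure rate below 2%.
TARGET_FAILURE_RATE = 0.02


@dataclass
class Deployment:
    service: str
    succeeded: bool


def deployment_failure_rate(deployments):
    """Share of deployments that failed, between 0.0 and 1.0."""
    if not deployments:
        raise ValueError("no deployments recorded")
    failed = sum(1 for d in deployments if not d.succeeded)
    return failed / len(deployments)


def meets_failure_target(deployments, target=TARGET_FAILURE_RATE):
    """Fitness check: True if the failure rate is strictly below the target."""
    return deployment_failure_rate(deployments) < target
```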

Example of a holistic fitness function

A platform team for an online shop implements a reliability metric with synthetic monitoring. An automated actor chooses random products from a product catalog, puts them in a basket, and checks out the items. The process is considered successful when the automated actor receives an order confirmation. The metric is the percentage of successful attempts to complete this process. The fitness function incorporates chaos engineering to inject faults randomly into the underlying infrastructure, measuring the checkout process's resilience to infrastructure failures. The platform team publishes the metric on a dashboard accessible to development teams.
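The synthetic checkout could be sketched in Python roughly as follows. `ShopClient` is a hypothetical interface standing in for the shop's real API, and a confirmation ID returned by `checkout` counts as a successful attempt. Fault injection would run separately against the infrastructure while this loop executes.

```python
import random
from typing import Optional, Protocol


class ShopClient(Protocol):
    """Hypothetical client for the shop's API; adapt it to your real endpoints."""

    def list_products(self) -> list[str]: ...
    def add_to_basket(self, product_id: str) -> None: ...
    def checkout(self) -> Optional[str]: ...  # order confirmation ID, or None


def checkout_success_rate(client: ShopClient, attempts: int,
                          rng: random.Random, max_items: int = 3) -> float:
    """Run the checkout workflow repeatedly and return the success percentage."""
    successes = 0
    for _ in range(attempts):
        try:
            products = client.list_products()
            count = rng.randint(1, min(max_items, len(products)))
            for product_id in rng.sample(products, k=count):
                client.add_to_basket(product_id)
            if client.checkout() is not None:  # order confirmation received
                successes += 1
        except Exception:
            # Any error along the workflow counts as a failed attempt.
            pass
    return 100 * successes / attempts
```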

Security

You can implement security fitness functions by checking the source code or by observing the system's behavior.

Check the source code with security scanning tools that look for third-party libraries with known vulnerabilities. Observe the system's behavior with scanning tools that check operating system and virtual network configurations or scan for open ports.
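The core of a dependency check is matching pinned versions against advisory data. The Python sketch below assumes a requirements-style file with exact `name==version` pins. The advisory table and package names are made up for illustration; real scanners draw on maintained vulnerability databases.

```python
# Hypothetical advisory data (package -> versions with known issues). In practice
# this comes from a vulnerability database or a scanner such as Snyk.
KNOWN_VULNERABLE = {
    "example-http": {"1.0.0", "1.0.1"},
    "example-yaml": {"0.9.2"},
}


def parse_pins(requirements_text):
    """Extract exact pins ("name==version") from a requirements-style file."""
    pins = {}
    for raw in requirements_text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and whitespace
        if "==" not in line:
            continue  # skip blank lines and unpinned or ranged requirements
        name, version = line.split("==", 1)
        pins[name.strip().lower()] = version.strip()
    return pins


def vulnerable_dependencies(requirements_text, advisories=KNOWN_VULNERABLE):
    """Return sorted (name, version) pairs that match a known advisory."""
    return sorted(
        (name, version)
        for name, version in parse_pins(requirements_text).items()
        if version in advisories.get(name, set())
    )
```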

Yet, all these techniques are atomic. Holistic fitness functions for security are more difficult to build because there is no objective view of the entire system's security. The best thing you can do is perform regular security audits or penetration tests.

Example of an atomic fitness function

A development team uses an automated security testing tool within its deployment pipeline. The tool checks for suspicious dependencies and scans Infrastructure as Code for known security misconfigurations.
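A single misconfiguration rule might look like the Python sketch below. It flags firewall rules that expose SSH or RDP to the whole internet. The resource structure is a hypothetical, simplified format loosely modeled on a parsed IaC template; real tools parse Terraform or CloudFormation files directly and ship many such rules.

```python
# Ports that should never be reachable from the whole internet (SSH and RDP).
SENSITIVE_PORTS = {22, 3389}
WORLD = "0.0.0.0/0"


def open_admin_ports(resources):
    """Flag security groups that expose sensitive ports to 0.0.0.0/0."""
    findings = []
    for resource in resources:
        if resource.get("type") != "security_group":
            continue
        for rule in resource.get("ingress", []):
            if WORLD not in rule.get("cidr_blocks", []):
                continue  # rule is restricted to specific networks
            exposed = sorted(
                p for p in SENSITIVE_PORTS if rule["from_port"] <= p <= rule["to_port"]
            )
            if exposed:
                findings.append(f"{resource['name']}: ports {exposed} open to the internet")
    return findings
```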

Example of a holistic fitness function

A security-enabling team performs internal security audits every two months. They gather all findings in an issue-tracking system and assign responsible teams to each issue.

Maintainability

There are many atomic fitness functions for this quality attribute. Typical source code analysis tools provide metrics like the number of code smells, the amount of duplicated code, or an approximation of the code's cognitive complexity.

Holistic fitness functions for maintainability, however, are difficult to build. It is impossible to measure maintainability holistically by looking only at the source code. You also need to consider which kinds of changes the code undergoes most often, as well as team dynamics and code ownership. That's why you can't calculate an objective "maintainability score."
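To show how such a metric can be computed, here is a Python sketch of a rough, McCabe-style cyclomatic complexity check based on the standard `ast` module. Note that this is a simpler metric than the cognitive complexity some tools report, and the threshold of 10 is a hypothetical default.

```python
import ast

# Constructs that add an independent path through the code.
_BRANCH_NODES = (ast.If, ast.For, ast.AsyncFor, ast.While, ast.IfExp, ast.ExceptHandler)


def cyclomatic_complexity(func_node):
    """Rough McCabe-style complexity: 1 + number of decision points.

    Nested functions are counted toward their enclosing function.
    """
    complexity = 1
    for node in ast.walk(func_node):
        if isinstance(node, _BRANCH_NODES):
            complexity += 1
        elif isinstance(node, ast.BoolOp):
            # "a and b and c" adds two extra paths
            complexity += len(node.values) - 1
        elif isinstance(node, ast.comprehension):
            complexity += len(node.ifs)
    return complexity


def complex_functions(source, max_complexity=10):
    """Map each function whose complexity exceeds max_complexity to its score."""
    result = {}
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            score = cyclomatic_complexity(node)
            if score > max_complexity:
                result[node.name] = score
    return result
```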

Example of an atomic fitness function

A development team uses a code analysis tool that reports test coverage metrics whenever they push their code.
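A coverage gate in the pipeline might look like the Python sketch below. It assumes the test run produces a Cobertura-style XML report, such as the one written by coverage.py's `coverage xml` command, whose root element carries a `line-rate` attribute between 0 and 1. The 80% minimum is a hypothetical threshold.

```python
import xml.etree.ElementTree as ET


def line_coverage(cobertura_xml):
    """Line coverage in percent, read from the report's root element."""
    root = ET.fromstring(cobertura_xml)
    return float(root.attrib["line-rate"]) * 100


def meets_coverage_threshold(cobertura_xml, minimum_percent=80.0):
    """Fitness check: True if line coverage reaches the minimum."""
    return line_coverage(cobertura_xml) >= minimum_percent
```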

Example of a holistic fitness function

A development team conducts a monthly “issue storming” session, during which they brainstorm maintainability issues they have observed over the last month. They then gather the issues in a list and prioritize them for fixing.

Usability

Usability, like maintainability, is challenging to measure objectively. However, there are some proxy metrics you can use as atomic fitness functions. For example, you can use tools to check whether your system meets accessibility standards, like the Web Content Accessibility Guidelines (WCAG).
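One WCAG rule is simple enough to check directly: the contrast ratio between text and background colors. The Python sketch below follows the relative-luminance and contrast-ratio formulas from WCAG 2.x and the level AA thresholds (4.5:1 for normal text, 3:1 for large text). Extracting colors from your actual UI is left out; a dedicated accessibility checker covers many more rules.

```python
def _channel(c8):
    """Linearize one 8-bit sRGB channel as defined for WCAG relative luminance."""
    c = c8 / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(rgb):
    r, g, b = (_channel(c) for c in rgb)
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def contrast_ratio(fg, bg):
    """Ratio between 1:1 (no contrast) and 21:1 (black on white)."""
    lighter, darker = sorted((relative_luminance(fg), relative_luminance(bg)), reverse=True)
    return (lighter + 0.05) / (darker + 0.05)


def meets_wcag_aa(fg, bg, large_text=False):
    return contrast_ratio(fg, bg) >= (3.0 if large_text else 4.5)
```

For example, gray text `#767676` on white just passes the 4.5:1 threshold, while the slightly lighter `#777777` does not.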

Example of an atomic fitness function

A development team uses a tool to simulate color blindness to check whether the user interface is accessible.

Example of a holistic fitness function

Usability experts invite a sample of the system's users to let them perform tasks within the system. They measure how fast the users can achieve their tasks and the errors they make most often.
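The measurements from such sessions can be summarized with a few lines of Python. The sketch assumes the experts record, per session, the task, the time taken in seconds, and the errors observed. The `Session` record and the error labels are hypothetical. It reports the median time per task and the most frequent errors.

```python
from collections import Counter
from dataclasses import dataclass, field
from statistics import median


@dataclass
class Session:
    task: str
    seconds: float
    errors: list[str] = field(default_factory=list)


def summarize(sessions, top_errors=3):
    """Return (median seconds per task, most common errors with their counts)."""
    times_by_task = {}
    for session in sessions:
        times_by_task.setdefault(session.task, []).append(session.seconds)
    median_times = {task: median(times) for task, times in times_by_task.items()}
    error_counts = Counter(error for s in sessions for error in s.errors)
    return median_times, error_counts.most_common(top_errors)
```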

Portability

Portability describes how easy it is to migrate the system from one environment to another. For example, think of switching cloud providers or migrating to a new hardware architecture. Portability is one of the hardest quality attributes to measure.

There are some things you can do, however: You can use atomic fitness functions to check for the absence of environment-specific code or patterns. You could also enforce an abstraction layer between the software and the environment. However, even these atomic fitness functions are complex to build and maintain.
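For a Python codebase, a check for environment-specific code could start as simply as the sketch below. It scans imports with the standard `ast` module and reports provider-specific SDKs. The forbidden-module list is a hypothetical policy, and the check is deliberately naive: it would not catch indirect usages such as `from google import cloud`.

```python
import ast

# Hypothetical policy: SDKs that tie the code to one specific cloud provider.
FORBIDDEN_MODULES = {"boto3", "botocore", "google.cloud", "azure"}


def environment_specific_imports(source, forbidden=FORBIDDEN_MODULES):
    """Return (line, module) for each import of a forbidden module or submodule."""
    found = []
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Import):
            names = [alias.name for alias in node.names]
        elif isinstance(node, ast.ImportFrom) and node.module:
            names = [node.module]  # relative imports have module=None and are skipped
        else:
            continue
        for name in names:
            if any(name == m or name.startswith(m + ".") for m in forbidden):
                found.append((node.lineno, name))
    return found
```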

Example of an atomic fitness function

A development team building an online application in the AWS cloud uses a code-scanning tool to check for the absence of AWS-specific technologies. They verify that they use only established open-source technologies (like Kubernetes) instead of cloud-specific ones (like AWS Lambda).

Example of a holistic fitness function

A development team performs an architecture review of their system every three months. They identify risks that potentially impede its portability. After the review, they gather all risks and implement mitigations.

Summary

Some quality attributes are easier to measure than others. Keep in mind that the list above is not exhaustive, neither in the quality attributes it covers nor in the fitness functions you could build. Focus on your system's most important quality attributes and build valuable fitness functions for those.

Further Information: Tools

Below, you can find a selection of tools to implement fitness functions for your system.

Grafana K6

Use for: Performance, Reliability

You can use Grafana K6 to put the system under load and measure its availability and performance. You can also use it for synthetic monitoring and implement a continuous fitness function in your production environment.

Chaos Mesh

Use for: Reliability

Use Chaos Mesh to inject faults into your Kubernetes environment. You can make pods or nodes fail and randomly increase network latency or CPU load.

AWS Fault Injection Service

Use for: Reliability

With the AWS Fault Injection Service, you can inject faults into AWS services, similar to Chaos Mesh with Kubernetes.

Snyk

Use for: Security

Snyk is a commercial security scanning tool that you can integrate into your deployment pipeline. It scans your code and its dependencies for security issues and flags suspicious configurations in your Infrastructure as Code.

Hint: Non-commercial and open-source alternatives are available, but they are often specific to frameworks and programming languages.

SonarQube

Use for: Maintainability

SonarQube measures source code, identifies code smells, and calculates maintainability metrics.

Teamscale

Use for: Maintainability

Teamscale, like SonarQube, performs source code analysis and calculates metrics like clone coverage. It can also check whether your code's modularization fits the one specified in architecture models.

Accessibility Checker

Use for: Usability

The Accessibility Checker checks your website against accessibility compliance rules like the WCAG.

AWS Config

Use for: Performance, Reliability, Security, Portability

Use AWS Config to assess the configurations of your cloud infrastructure.

LASR

Use for: Performance, Reliability, Security, Maintainability, Usability

The Lightweight Approach for Software Reviews (LASR for short) is a software review approach designed to be performed by development teams themselves. You can use it as a fitness function for all quality attributes. However, keep in mind that its objectivity is limited: it relies on the knowledge and assumptions of the team performing the review, which, in this case, is the same team that builds the system.